Augmented Reality

The AR Helpers - Part 1

November 16, 2017

In our new blog series AR Helpers, we share our ideas for improving the usability of augmented reality applications. Over the coming year, we will turn the series into a small reference guide to various AR usability features.

Since this summer, augmented reality (AR) has reached the mainstream and helps many people with their everyday tasks. Tim Cook, the CEO of Apple, even says that augmented reality can be compared to the idea of the smartphone. As with the smartphone, however, the general public first has to get used to this new technology. Many users do not yet know exactly how augmented reality works or how to operate AR apps.

Since we have been working on this exciting topic for more than six years, we have naturally given a lot of thought to usability in augmented reality. These thoughts have already produced several "AR Helpers" that make augmented reality easier for our users. Today we start with two AR Helpers that are partly quite obvious but are nonetheless missing from many augmented reality apps.

You need a target, man!

Augmented reality now comes in many different forms. Some of them rely on image recognition (image-based tracking) and use so-called targets: printed images or shapes. Two very prominent examples of frameworks for target-based augmented reality are ARToolKit and Vuforia. With these frameworks, the target determines where the digital content is positioned in the real world. The user therefore has to point the smartphone camera at the target first before the augmented reality experience can begin.

One problem with this technique is that many users do not actually know how this kind of augmented reality works. They open the app and, instead of content, are confronted with a camera stream they do not really know what to do with. In many cases, they close the app out of frustration and, in the worst case, uninstall it right away.

The first of our AR Helpers, and a really simple one, targets exactly this problem. To solve it, we display an overlay with a brief explanation directly on top of the camera stream. The overlay can also be timed, with the timing adapted to the app's user group. In some cases, the overlay should always be visible as a transparent layer over the camera stream. In other cases, it is enough to show the explanatory overlay only after several seconds have passed without a target being detected in the camera stream. We have also prepared two video examples showing the different timings.
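The timing logic behind the explanatory overlay fits into a few lines. The sketch below is a hypothetical illustration, not our production code and not part of any AR framework's API: the class `HintOverlayController`, its `delay_seconds` parameter and the `update()` method are names invented for this example. It assumes the app reports once per camera frame whether a target is currently tracked, and it assumes the hint disappears as soon as a target is found. A delay of `0.0` covers the "always visible" case; a positive delay gives the "show after a few seconds without a target" case.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class HintOverlayController:
    """Hypothetical helper that decides when to show the explanatory overlay.

    delay_seconds == 0.0 shows the hint as soon as no target is tracked;
    a positive value waits that many seconds without a detected target.
    """

    delay_seconds: float = 3.0
    # Timestamp of the first frame without a target (None while tracking).
    _lost_since: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, target_detected: bool, now: float) -> bool:
        """Feed one frame's tracking result; return True if the hint is visible."""
        if target_detected:
            # Target in view: reset the timer and hide the hint.
            self._lost_since = None
            return False
        if self._lost_since is None:
            # First frame without a target: start the timer here.
            self._lost_since = now
        return now - self._lost_since >= self.delay_seconds


# Usage: timestamps in seconds, e.g. taken from the camera frame callback.
delayed = HintOverlayController(delay_seconds=3.0)
print(delayed.update(False, now=0.0))  # False: timer just started
print(delayed.update(False, now=3.5))  # True: 3.5 s without a target
print(delayed.update(True, now=4.0))   # False: target found, hint hidden

always = HintOverlayController(delay_seconds=0.0)
print(always.update(False, now=0.0))   # True: hint shown immediately
```

Because the delay is a single parameter, the timing can be tuned per user group without touching the tracking code.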

Follow me, little 3D model!

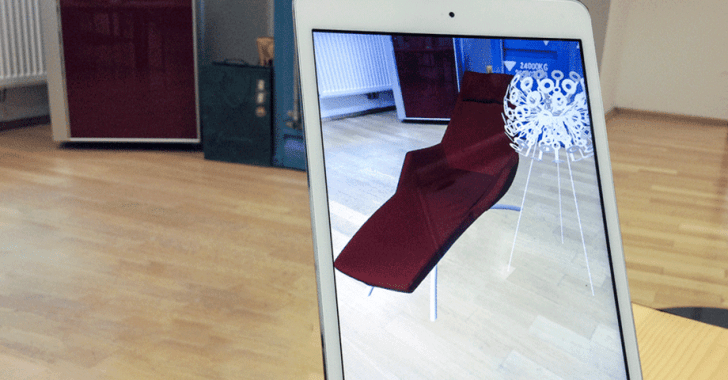

The second AR Helper addresses the problem discussed above but has a somewhat different area of application. This helper lets users see the digital content (e.g., a 3D model of a roller blind) directly in front of the camera, without a target being in the camera stream. As a result, users are not faced with an empty camera stream and can already picture what the augmented reality experience has in store for them. They are also more inclined to go looking for the target when they already have the goal in front of their eyes, or rather in front of the camera.
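The per-frame placement decision for this helper might look like the following minimal sketch. It is a hypothetical illustration under stated assumptions: `model_position`, its parameters and the `distance` default are invented for this example, positions are plain `(x, y, z)` tuples rather than any framework's pose type, and `camera_forward` is assumed to be a unit vector supplied by whatever tracking framework the app uses (e.g. Vuforia). It also assumes that the model snaps to the target as soon as one is tracked. While no target is visible, the model is placed a fixed distance along the camera's viewing direction, so it "follows" the camera as the user moves.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def model_position(
    camera_position: Vec3,
    camera_forward: Vec3,
    target_position: Optional[Vec3],
    distance: float = 0.5,
) -> Tuple[Vec3, bool]:
    """Return (position for the 3D model, whether it is anchored to the target).

    Hypothetical per-frame helper: while no target is tracked, the model is
    placed `distance` scene units along the camera's unit viewing direction,
    so it follows the camera. Once a target is tracked, the model snaps to it.
    """
    if target_position is not None:
        # Normal target-based AR: the target defines the model's position.
        return target_position, True
    # Fallback: keep the model floating in front of the camera.
    px, py, pz = camera_position
    fx, fy, fz = camera_forward
    return (px + fx * distance, py + fy * distance, pz + fz * distance), False


# Usage: camera at the origin looking down -Z, no target in view yet.
print(model_position((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), None))
# ((0.0, 0.0, -0.5), False)
print(model_position((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.2, 0.0, -1.0)))
# ((0.2, 0.0, -1.0), True)
```

Calling this once per frame is what makes the model follow the camera; a real app would likely also smooth the transition when the model jumps from the camera to the target.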